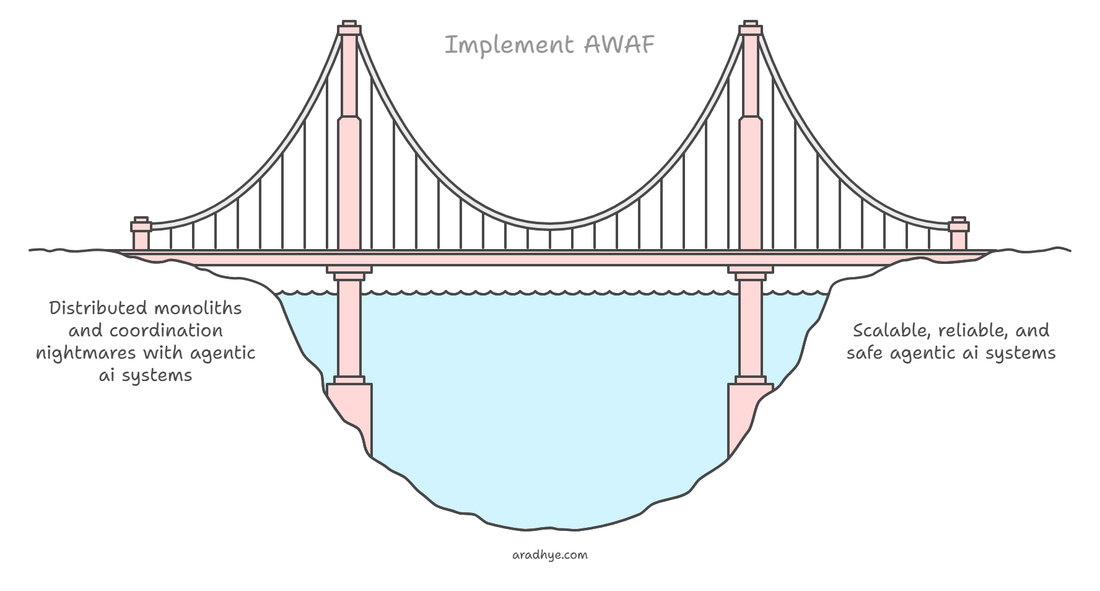

Every team is bolting agentic AI onto everything right now. It's giving pure Agent Smith energy. And we're about to pay the same price we paid with microservices unless we build a framework to stop it.

Are We Building AI Agents Like We Built Microservices? (And Paying the Same Price?)